This tutorial shows you how to setup a daily automated pull of Google Search Console data (formerly known as Google Webmaster Tools) using R. The example shows you how to save your Organic search data daily to get around Google’s current 90 day limit on historical data. Armed with this historical organic search data you can measure your content marketing and SEO efforts over months and years not just the previous 90 days. Take back your ‘not provided’ organic keyword data.

No previous knowledge of programming, APIs or R is necessary to complete this tutorial. Like my previous tutorial on getting started with the Google Analytics reporting API with Python (please check it out), I want to help you get started with a detailed example that you can use right now.

Google Search Console Data: What’s available via the API?

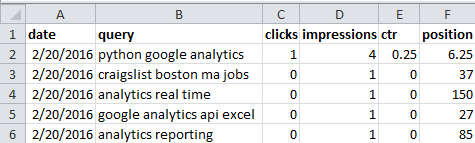

The data that we will use from the Google search console search analytics reporting includes: search query and organic landing page clicks, impressions, click-through rate, and ranking position. You can even see this search data for your images or videos indexed by Google. You can check out the full list of the data dimensions, metrics and breakdowns in Google Search Console’s documentation.

Google Search Console R Tutorial

2) Install R Studio. R Studio is an integrated development environment (IDE) that has a set of tools to make you more productive when working with R.

3) Launch R Studio and install the R packages we will use in this example: searchConsoleR & googleAuthR. In the Console module in R Studio (the bottom left pane) run the code below. Copy and paste or type each command shown below and press enter. This will install the packages via an online package repository called the Comprehensive R Archive Network or CRAN.

> install.packages("searchConsoleR")

> install.packages("googleAuthR")

When your packages install successfully you’ll see a message in the console that says “package successfully installed…”

4) Download the Google Search Console R Analytics Script

Save or copy the script below to your computer and open it in RStudio.

Google Search Console Service Account Authentication in R

5) Download and setup your client_secret.json. This tutorial uses a “server to server” authentication because we are going to be scheduling the R script to run daily via a batch file command. There is no web browser that is going to popup and let you authenticate and allow access to the R application. So you cannot use client side OAuth2 because we don’t have an “interactive environment” there is no web browser. You will be setting up a service account in the Google Developer Console that will be used in this tutorial.

Create a new Google Developers Console project with the Search Console API enabled. That link will automatically enable the search console API.

“Create a new project” should be showing in the drop down. Click continue to Create a new project.

Click on the “Go to credentials” button

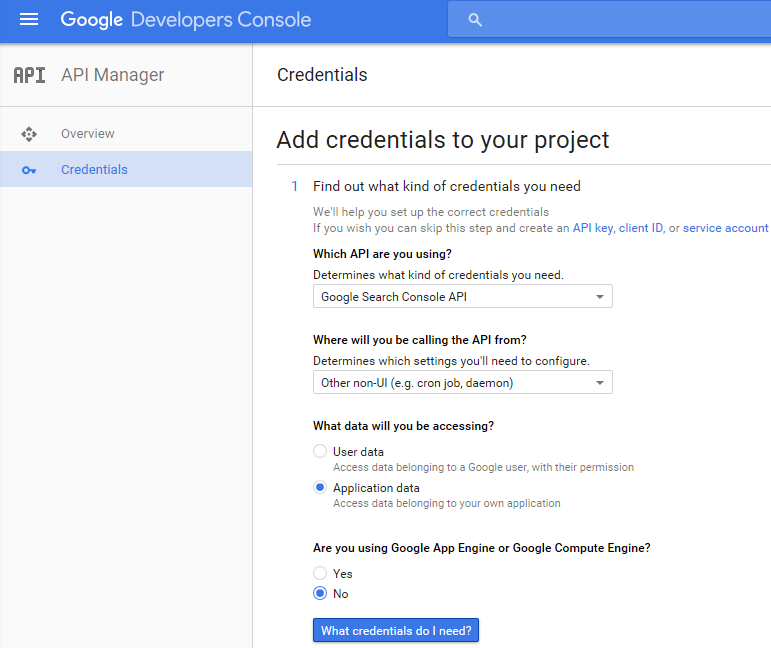

On the Credentials screen in the first drop down “Which API are you using?” it should have “Google Search Console API” preselected. In the second drop down “Where will you be calling the API from?” choose “Other non-UI (e.g. cron job, daemon)”. For “What data will you be accessing?” choose the radio button for “Application data” and for “Are you using Google App Engine or Google Compute Engine?” choose the “No” radio button. Then click on the button “What credentials do I need?”. See the screen shot below for the selections:

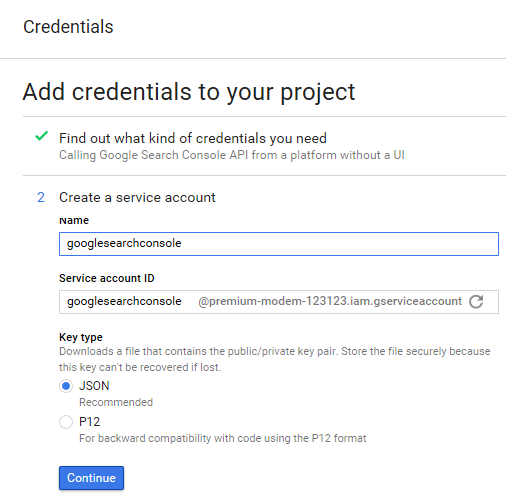

Type in a name for the “Name” field I named my service account “googlesearchconsole”. Make sure to copy and paste your service account id somewhere. This is your service account email address that you’ll need in step 6 below to allow access to your search console data for that user. Choose the “JSON” radio button for the “Key type” and click the “Continue” button.

When you click continue your JSON credentials will be downloaded. A message will popup that says “Service account created The service account “googlesearchconsole” was given editor permission for the project. The account’s public/private key pair My Project-67237f1e6539.json has been saved on your computer. This is the only copy of the key, so store it securely.”

Make sure that you listen to the warning, you can’t go back into the Developer Console and download another copy of the JSON file so save it somewhere safe.

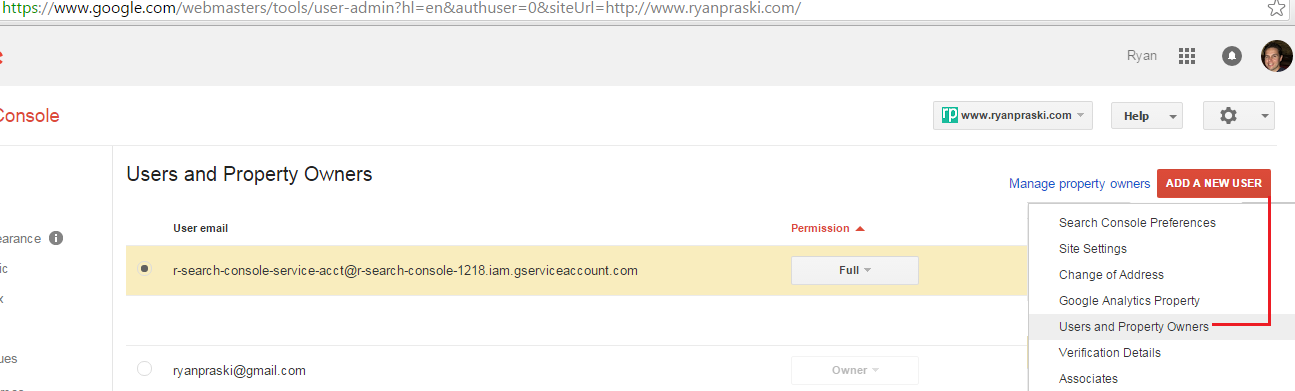

6) Give full permission for the service account email you just created in the admin of your search console account.

https://www.google.com/webmasters/tools/user-admin?hl=en&siteUrl=https://www.ryanpraski.com/

Replace http://www.ryanprask.com/ with your site URL for a direct link to the “Users and Property Owners” for your site Search Console permissions. Alternatively you can go to the Google Search Console home and click on your website (property). Then click on the gear icon in the top right > users and property owners. See the screen shot below.

Next click on the Add A New User button and paste in your service account email address and choose Full permission from the drop down.

Run the Google Search Console API Script with R

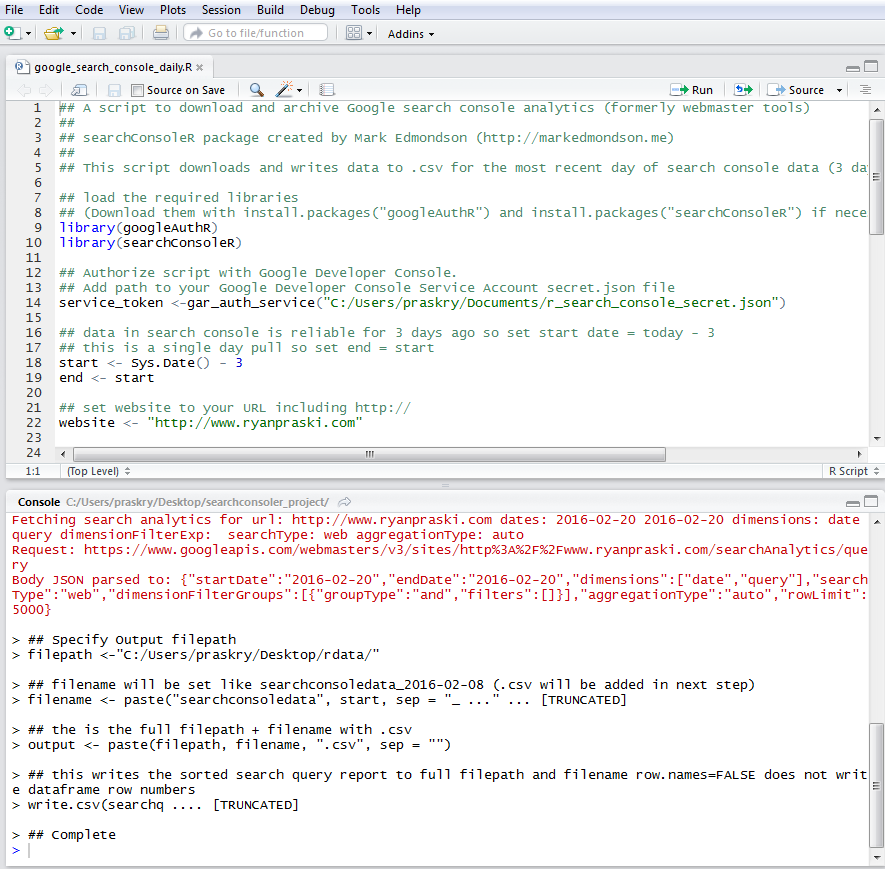

7) Open the google_search_console_daily.R script in RStudio that you downloaded in step 4 . The script will open in the top left source pane in RStudio. Make the edits below to configure the R script to pull your Google Search Console data.

• Line 14 add the path to your secret.json file for your service account that you downloaded and saved in step 5.

• Line 22 add the URL for your website as it appears in the search console (webmaster tools). Make sure to include http://.

• Line 45 add the file path you’d like to save the .csv Search Console data file that this R Script will create.

• Remember to save your R script after you make the updates.

8) Run your google_search_console_daily.R script. Click on Source at the top right of the top left pane or press Control + Shift + Enter. Alternatively highlight all the lines (select all) of the R Script and click Run at the top right next to the Source button. The screen shot below shows the script run successfully on my computer. As the script runs you’ll see the comments from the code printed in the Console pane at the bottom in black font. In red font you’ll see the URL query parameters used in request that is sent to the Search Console API end point. If everything has worked properly you will see ## Complete on the last line in the console. Your .csv file has been written to the folder you specified on Line 45 of the script.

9) Open the .csv search console data file in Excel. See the screen shot below for my search console query data. It looks like not a lot of people are searching for analytics related content on Google on a Saturday.

Automate a daily pull of the Google Search Console data

10) The original goal was to save a daily extract of the historical Google Search Console data so we could get around the 90 day Google limit on historical data.

Create a .bat file file in your text editor. This is an automated way to run our R Script via a DOS Command. See the sample file below. In the first part of your file you’ll have to replace my path with your path to your R installation. In second part of the file you will need to replace my path with the path to your google_search_console_daily.R script. Save this .bat file in the same directory as your google_search_console_daily.R Script.

@echo off "C:\Users\praskry\Documents\R\R-3.2.3\bin\x64\R.exe" CMD BATCH C:\Users\praskry\Desktop\google_search_console_daily.R

Create a task to run the R Google Search Console Task Daily

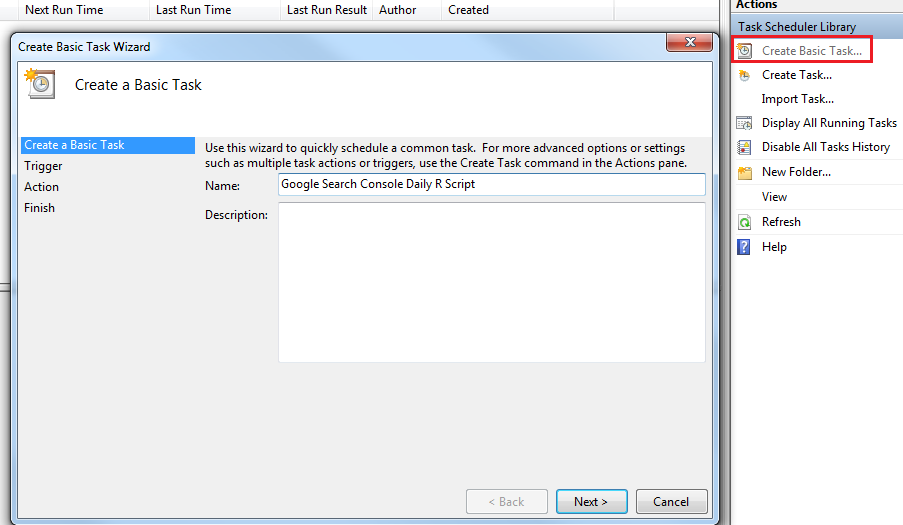

On Windows your Task Scheduler should be in your Control Panels > Administrative tools. Here is the path to the program on my computer.

Control Panel\All Control Panel Items\Administrative Tools\Task Scheduler

In right navigation under Actions click on Create Basic Task. This will launch an easy to follow Wizard that will guide you through the necessary steps to create your task.

Step 1 Create a Basic Task- Name your task Google Search Console Daily R Script then click next.

Step 2 Trigger- Choose Daily for When you want the task to start then click next.

Step 3 Daily- Choose a start time a few minutes in the future so we can test to make sure our R script runs via this scheduled task. Then click next.

Step 4 Action- Choose start a program. Then click next.

Step 5 Start a Program- Click on the Browse button next to the Program/script field and select your .bat file. Recall my file was located here on my computer: C:\Users\praskry\Desktop\google_search_console_daily_R\search_console_pull_task.bat Then click next.

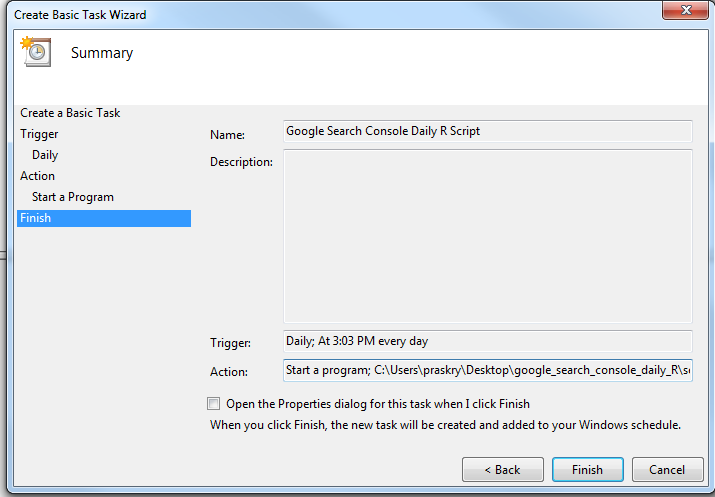

Step 6 Summary- This gives you a summary of the task including the trigger and action that were selected in the wizard. Then click finish.

After your task automatically runs, a new file called google_search_console_daily.Rout will be created in the same directory as your google_search_console_daily.R Script. You can open the “out” file via a text editor and it shows the details of the R Script that was run via our scheduled task and .bat file. If you R Script ran successfully your “out” file will show everything logged in the console shown in the screen shot in step 8 as well as time metrics showing how long the script took to run. If the script does not run successfully the “out” file will log the errors that occur. On the first run I would guess most errors for people will be around the file paths in the .bat file. If you have any questions getting this set up please let me know in the comments.

Automation Complete! Now everyday at 3:03pm my R Script will pull daily Google Search query data from the Google Search Console API automatically.

Combine your Daily Search Console CSV Files

Fast forward a few weeks. Our automated daily Google Search Console R API pulls are running smoothly. Now we want to put all the individual daily .csv files together for a full view of our search query data over time. To join the .csv files together we are going to use the R data.table package. Open RStudio. In the R console we are going to run the code shown below. For your file replace my path to list.files with your path to where the daily search console .csv files are saved on your computer. The pattern (file name filter) assumes that you didn’t change the names of your search console .csv files and all have “searchconsoledata” in the .csv file name. When you run this code you should see a dataframe in R studio called “combodata” with all search query .csv files combined for all the days the automated R Script has run.

> install.packages("data.table")

> library(data.table)

myfilename <- list.files("C:/Users/praskry/Desktop/rdata", pattern = "searchconsoledata", full.names = TRUE)

combodata <- rbindlist(lapply(myfilename,fread))

View(combodata)

Extending the Tutorial

If you have any questions please leave a comment below or contact me directly. After you setup your daily query data pull by following the steps in the tutorial there are a few options for extending the tutorial even further. Below I’ll show you how to update the R scripts to pull even more search console data.

Pull the Previous 90 Days of Google Search Console Data

I recommend you also pull historical data for the previous 90 days. This way you have all available historic data saved. To do this simply change the start and end dates in the R Script on lines 18 and 19 as shown below:

start <- Sys.Date() - 93 end <- Sys.Date() - 3

Batching Requests to pull more than 5000 Rows

On line 40 change rowLimit to a number greater than 5000 for example rowLimit = 100000, and add walk_data = c(“byDate”)) . This allows the script to batch the search console API requests by day to get the full data set when pulling the last 90 days of search data.

Pull Landing Page Google Search Console Data

The content on this site is for a very small niche audience, so I have a long tail of organic search terms. Google does not report on many long tail search queries in the search console reporting. The quote below is from the Google documentation.

“To protect user privacy, Search Analytics doesn’t show all data. For example, we might not track some queries that are made a very small number of times or those that contain personal or sensitive information.”

For my site this means many of the queries do not show in the search console. For my daily search console data pulls I also pull organic landing page data by changing the download_dimensions parameters in the R Script on line 25 as shown below:

## what to download, choose between data, query, page, device, country

download_dimensions <- c('date','query')

For my daily search console data pulls I also pull data for page broken down by query. See the changes to R Script on line 25 below:

## what to download, choose between data, query, page, device, country

download_dimensions <- c('date','page','query')

Pull Image or Video Google Search Console Data

Why would you want organic search data for images? Say you are a ski resort or a national park. Many of your visitors are searching for trail maps. You want to see historical organic search data to make sure trail map images from your site are ranking high and getting clicks. If images or videos indexed by Google drive organic traffic then you can get data on these content types by changing the type parameter on line 28 from ‘web’ to ‘image’ or ‘video’. See the example for ‘image’ below:

## what type of Google search, choose between 'web', 'video' or 'image'

type <- c('image')

If you have any questions or think of a great way to analyze or visualize the Google Search Console data you’ve pulled from the API with R let me know in the comments. Thank you to Mark Edmondson for creating the searchConsoleR & googleAuthR packages. My tutorial was inspired by and is based on my attempt to complete Mark’s tutorial post on how to automatically pull daily Google search console data.

test

Hi Ryan,

I’ve looked into both this and Mark’s tutorial but I’m still having a few issues. Using your method of authentication outside of scr_auth application with Google Search R resulted in some sort of improper file input, does the JSON file have to be in the working directory for it to pull correctly?

Best,

Hana

Hi Hana,

Can you double check that the path to the JSON file in your R Script is correct. If you still have issues can you share a screen shot of the error?

-Ryan

Hi Ryan, I am eventually getting the error

“Error: could not find function “rbindlist”” when trying to combine the data. Has something changed in the libraries or what might be the issue?

Hi Marc,

You need to install then include the data.table package to use the rbindlist() function.

-Ryan

Hi Ryan,

is there a way to get the data from an email with “Restricted” access to the site like with a different scope, or does the user have to have full access?

Hi Addie,

Yes the script should work with both “Full” and “Restricted” Google Search Console Users. Let me know if there is a specific error I can help you with.

-Ryan

This is the error I’m getting:

“Request Status Code: 403

Error in checkGoogleAPIError(req) :

JSON fetch error: User does not have sufficient permission for site “

Hi Addie,

Did you complete Step 5 & 6 from the post? The service account you create needs to be added as a user and given access to the account.

5) Download and setup your client_secret.json.

6) Give permission for the service account email you just created in the admin of your search console account.

I’ve been using this script and is a REAL time saver, I use it to extract my main queries on a daily basis the only downside is that google privacy policy doesn’t allow you to have all the kws.

Keep the good work Ryan!

hello, the script is awesome; however, I am encountering an issue I was hoping to get help on. When setup a batch for more than 10k rows to pull dimensions: ‘query’, ‘page’, ‘country’, ‘date’ I keep getting an error “Error in rbind(deparse.level, …) :

numbers of columns of arguments do not match”.

When I do 10k or less, it’ll work. Also, I use seo for excel too and I know that the data can be parsed that way because I do so with the GSC connector

thanks!

Hi Anthony,

Thanks for the question. It looks like there is a data anomaly in the Google Search Console data for September 2-6 2016. See Google’s response here: https://support.google.com/webmasters/answer/6211453#search_analytics

I’d suggest trying again in a few days. If your issue persists please let me know.

Best,

Ryan

Hi Anthony,

I tried running the script again this morning and it is working again on my end so Google must have resolved their issue. Is your query setup to walk_data byDate like the sample code below:

Great explanation, thank you !

Thanks for the tutorial.

Everything works perfectly fine up until trying to automate the download, do you have any tips on where I could be going wrong. I’m trying to save the output to a network location, could this be the issue? The task scheduler output doesnt record any problems whatso ever !

Hi Tope,

It could be an issue with the output on a network drive instead of the local drive. Try saving the output to a local drive instead. If you want to troubleshoot further email me ryan@ryanpraski.com

Best,

Ryan

Thanks Ryan, I’ve just given that a try too with no success. I’ll send you an email in the morning.

Hi Ryan,

I found an easy work around for my task scheduler issue. There is an R package that allows you to Schedule R scripts on Windows: https://github.com/bnosac/taskscheduleR

Hi Tope,

Glad to hear you got it working. taskscheduleR is a great package. It would be great if you could share your script. I’m sure other people would also value your solution.

Hey Ryan, sorry it wasn’t a script it was a add on. Link above.

Great writeup Ryan. As a developer, this looks like something that can be automated. Do you think, a web tool that downloads the data everyday would be helpful ?

Hi Ryan

I’m getting an error

Warning message:

In data_parse_function(req$content, …) : No data found

have you ever had the same problem?

Hi Artur,

I would suggest that you double check in the Google Search Console (fka webmaster tools) that the URL matches what you have in your R Script. I would also check that for the date range that you are pulling in R there is data in the Google Search console web interface.

-Ryan

But if i want download only positions, not ctr and impressions. How i can do that?

Hi Aleksandr,

The search console api query will return all metrics available for the dimension combination that you query. After the query is returned and the data is in an R dataframe you can exclude columns so only the average position shows. Below is one way create a new dataframe called dfposition that only includes specified columns

> dfposition View(dfposition)

https://uploads.disquscdn.com/images/b41f8e6396b08a50b07fbd1284566be5f2d831ea30663afc42a596bdf386ed50.png

Hi Ryan, this is great thank you. I’ve been having issues with authentication trying to get this to work across multiple domains. I’ve tried adding and verifying and additional domain the Google Cloud project credentials and generating a new key but keep getting a “JSON fetch error: User does not have sufficient permission for site”. Do I actually need to create a new Google Cloud project for every domain?

Hi Alex,

You should only have to create one project and one JSON key. You will have to repeat step 6 for each domain and give the service account access in the admin of the search console for each domain.

-Ryan

Thanks that was a quick response. That was what I was doing but I realised I made a stupid mistake and was calling the non secure version of the domain!

Do you have experience using list_crawl_error_samples? I keep running into the following error: Error in if (inherits(X[[j]], “data.frame”) && ncol(xj) > 1L) X[[j]] <- as.matrix(X[[j]]) :

missing value where TRUE/FALSE needed

any help would be greatly appreciated!

Hi Ryan,

I’m trying to import search console data into a database but i’m having some problems.

I’m using basic coding:

library(RODBC)

specificDB= odbcConnect(dsn =”DB”)

sqlSave(specificDB, data, tablename=’SEO’)

This is creating my “seo” table but is not making the insert of the data. Do you have any idea how to overcome this?

Hi Pedro,

Are you getting an error message when you try to save the Google Search Console data into a database?

-Ryan

Hi Ryan! Great piece of work! When I run the script it goes smooth but when I compare the numbers to Search Console e.g. impressions, my output is showing less than Search Console. I tried increasing the row limits, taking fewer days, etc.. Do you have an idea why?

Thanks so much for sharing this. It has been a real blessing!

Hi ryan, whenever I use the gar_auth_service function I get the the Bad Request(with 400 code) error. I’m sure I followed the steps u menitoned correctly( regarding Account Authentication and Json file). Can you help me please?

Are you sure you have access to the Google Search Console for the account that you are using? I’d also double check your credentials. If you are still having issues let me know.

Thank you for very quick answer. I created a new project, downloaded new json, and gave full access to new mail adresi; this time I get the error of unsufficient permission after several 403 error. Should I also give access for my subdomains like for example,example.com/cars.Second question, secret_json in your script code is json file downloaded in credentials section right?(file with very awkard name) Since my json have weird name, I could not be sure.Thank you for your help.

Sounds like it still is an authentication issue. I would recommend just starting from the beginning and going through each step. I would bet there is some minor detail that is causing your issue. Let me know when you get it figured out.

I have solved it, I made a silly mistake by not adding “/” at the end of my url 🙁 Thank you for help and the great article, It helped me a lot.

Glad to hear it was something simple. let me know if you have any other questions with pulling the Google Search Console data.

Hey Ryan , got this error

Error in is.data.frame(x) : object ‘searchquery’ not found

Hi Mahender,

Did you find a fix for the error?

Hi Vishal,

Can you share a screen shot of the R Studio error you are getting with the searchConsoleR code.

Best,

Ryan

Hi Ryan,

Thank you so much for your response. The script runs fine when doing it manually,it is the automation part that gives error.I am attaching the screen shot of the error. Let me know if you need the screen shot of the script as well.https://uploads.disquscdn.com/images/244f70c4981afa16f98c3a12f4b8b36f5126e09f39b18303e0026470a706422e.png

Hi Vishal,

It looks like you are trying to use scr_auth()

You can only use the json file with your client_id and client_secret because when automating you are in the “non interactive” environment as the error says. Try using the code that is included in the post without modifying when you automate it.

-Ryan

Hi Ryan,

It worked! Thank you so much.

Great post and script. I need someone to make this work via Power Query, which would be much easier for me to implement.

Can anyone explain this error:

Error in search_analytics(siteURL = website, startDate = start, endDate = end, :

could not find function “search_analytics”

Hello Ryan,

when I try to execute Your code, I receive error like this:

> service_token <-gar_auth_service("C:/token/WWW/gog_webmaster/POLISH_ALL/my_file.json")

Error in init_oauth_service_account(self$secrets, scope = self$params$scope, :

Bad Request (HTTP 400).

Do You know what kind of problem is it?

When creating sevice account, i need to choose account role. Maybe this is couse of my problem. What role I need to choose?

Try this:

Sys.setenv(“SC_AUTH_FILE” = “C:/token/WWW/gog_webmaster/POLISH_ALL/my_file.json”)

instead of:

service_token <-gar_auth_service("C:/token/WWW/gog_webmaster/POLISH_ALL/my_file.json")

Thanks for sharing the post ,I was able to pull data using the script with a little tweak as line 14 <"service_token didn’t work for me so used Sys.setenv(“SC_AUTH_FILE” instead ,which seems to work. Also was wondering if there is a way to pull the data for multiple URL(Page) instead of just one something like “page %in% pageurl” where pageurl is a list of url’s ??

Hi , I followed all these step available in the article

I faced following issue first with service_token <-gar_auth_service

error

Error in init_oauth_service_account(self$secrets, scope = self$params$scope, :

Bad Request (HTTP 400).

then i used

Sys.setenv("SC_AUTH_FILE" = "C:/token/my_file.json")

got following error

Error: Not authenticated. Run scr_auth(

I have mentioned correct file path also i have full owner access of the account

Please help

hi,

i got the same problem, and i don’t know how to proceed,

if anybody can explain the meaning of the error and can help us fix it,

I will be grateful.

has anyone found a resoliution for this issue? I encountered the same problem.

for anyone having issues you need to set the scope using options(googleAuthR.scopes.selected=c(“https://www.googleapis.com/auth/analytics”,”https://www.googleapis.com/auth/webmasters”))

Howdy Ryan, awesome stuff. I chuckled at the picture up top.

I’m curious if you have any pointers on how to modulate this a bit. I’m curious if this process can be adapted to include “searched with” information for a particular subject. I’d like to know if certain subjects are being searched in conjunction with other terms, perhaps terms that are just listed inductively. Ex: “subject X” AND “additional search term Y”, and how frequently this has occurred in the last 24 hours.

I guess my question also indicates some ignorance on my part of what exactly your excel spreadsheet here demonstrates. What I’m hoping to do is generate a spreadsheet like this, but have the “b column” be composed of co-search terms that occur in relation to a subject term/person etc. This may be what you’re already doing here in relation to your website url, but I am struggling a bit to confirm this 100%.

Thanks, and thanks in advance for your patience.

DTM

Thanks for this excellent post. Is it possible to get the “Crawl Status” from Google Search Console?